In this article we explore how to use the bootstrap method to estimate the parameters of an unobserved population distribution. We then apply this approach to the EUR/USD exchange rate, one of the most liquid currency pairs in the foreign-exchange market. Our primary software tool is MATLAB R2022b, and we also reference Bradley Efron’s foundational work on bootstrap techniques.

1. resources-motivation

- GROK.COM | as a practical guide to help structure and brainstorm each step of our data-analysis workflow.

- Bradley Efron’s Original Papers on Bootstrap | to provide the theoretical underpinning and justification for resampling.

- MATLAB R2022b | as the computational environment to run the simulations.

Why bootstrapping? In many real scenarios (especially in financial markets) we cannot reliably assume a particular parametric form (e.g., Gaussian) for our data. Instead, this method allows us to resample, with replacement, from the observed dataset itself to approximate confidence intervals, estimate bias, and measure the variability of a chosen statistic.
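To make the resampling idea concrete, here is a minimal sketch of a percentile bootstrap for the mean, written out by hand. The data are synthetic (an assumed heavy-tailed mixture, not the EUR/USD series), and the variable names are illustrative only.

```matlab
% Minimal percentile bootstrap of the mean, written out by hand.
% Synthetic heavy-tailed data (assumption): mostly small moves, occasional large shocks.
rng(1);                                   % reproducible resampling
n = 500;
xDemo = 0.01 * randn(n, 1) .* (1 + 4 * (rand(n, 1) < 0.05));
B = 1000;                                 % number of bootstrap replicates
bootMean = zeros(B, 1);
for b = 1:B
    idx = randi(n, n, 1);                 % draw n indices with replacement
    bootMean(b) = mean(xDemo(idx));       % statistic evaluated on the resample
end
seBoot = std(bootMean);                   % bootstrap standard error
sortedMeans = sort(bootMean);
ciBoot = [sortedMeans(round(0.025 * B)), sortedMeans(round(0.975 * B))];  % approx. 95% interval
fprintf('mean = %.3e, SE = %.3e, CI = [%.3e, %.3e]\n', ...
    mean(xDemo), seBoot, ciBoot(1), ciBoot(2));
```

The loop does by hand what a call such as bootstrp(B, @mean, xDemo) automates; any other statistic can be swapped in for mean.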

Forecasting volatility is crucial for risk management, dynamic position sizing, and developing robust trading strategies. Traditional econometric approaches such as GARCH assume specific parametric distributions, which often fail to capture the heavy tails and skewness observed in financial data. That is why we apply the bootstrap method for distributional inference here and then compare four machine-learning (ML) models:

- Bagging (Bootstrap Aggregation of decision trees).

- Random Forest.

- Support-Vector Regression (SVR) with an RBF kernel.

- Feed-forward Neural Network (NN) with a single hidden layer.

Our dataset consists of daily closing prices of EUR/USD, which we turn into log-returns. Using MATLAB R2022b, we perform a bootstrap analysis to characterize distributional properties (mean, variance, skewness, kurtosis) and then construct a forecasting task for 5-day realized volatility.

Also, note that each model is trained on 80% of the data and tested on the remaining 20%, using RMSE, MAE and R² as evaluation metrics. Finally, we extract predictor importance from the Random Forest model to see which lagged returns matter most.
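The log-return transformation mentioned above can be illustrated on a tiny price vector. The prices below are hypothetical values chosen for the example, not taken from the dataset.

```matlab
% Toy price series (hypothetical values, not the EUR/USD data)
pDemo = [1.1000; 1.1011; 1.0989; 1.1024];
rDemo = diff(log(pDemo));                 % r(t) = log(p(t) / p(t-1))
totalFromReturns = exp(sum(rDemo));       % log-returns add up across days
totalFromPrices  = pDemo(end) / pDemo(1); % gross return over the whole period
fprintf('%.10f vs %.10f\n', totalFromReturns, totalFromPrices);
```

Because log-returns are additive over time, the sum of the daily values recovers the return over the whole period, which is one reason they are the usual input for volatility work.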

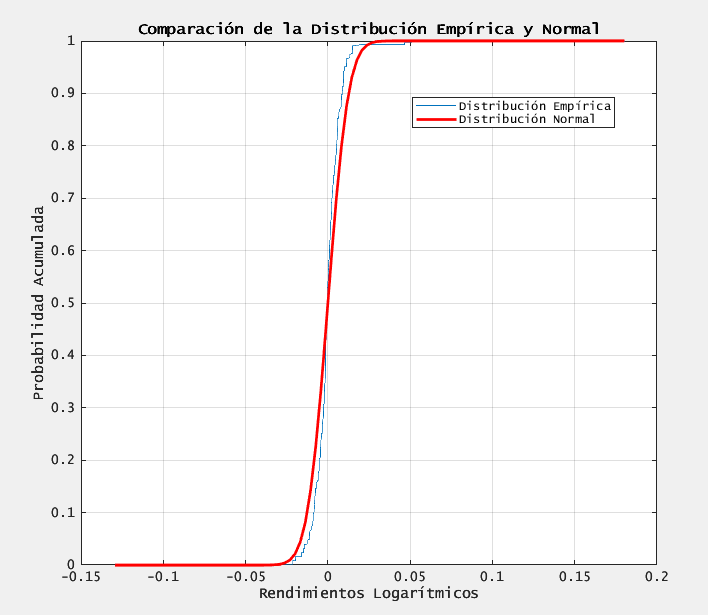

2. distributional analysis of EUR/USD log-returns

Before forecasting volatility, it’s essential to understand the distributional characteristics of daily returns. We first compute log-returns and then apply a bootstrap procedure.

set(groot, 'DefaultAxesFontName', 'Lucida Console');

set(groot, 'DefaultTextFontName', 'Lucida Console');

set(groot, 'DefaultAxesFontSize', 10);

set(groot, 'DefaultTextFontSize', 10);

clear; close all; clc; rng default % Clear variables, close figures, and fix the random seed

% =========================================================================

% 1. DATA LOADING AND BASIC STATISTICS

% =========================================================================

% Load the EUR/USD price file and compute log-returns

load eurusd.txt

x = diff(log(eurusd)); % Log-returns

n = numel(x); % Number of observations

mu = mean(x); % Sample mean

sigma2 = var(x); % Sample variance

sigma = std(x); % Sample standard deviation

% =========================================================================

% 2. VISUALIZING THE RETURN DISTRIBUTION

% =========================================================================

% Plot the empirical CDF together with the fitted normal CDF

figure;

[h, ~] = cdfplot(x); % Empirical CDF

hold on;

xv = linspace(min(x), max(x));

plot(xv, normcdf(xv, mu, sigma), 'r-', 'LineWidth', 2);

legend('Distribución Empírica', 'Distribución Normal', 'Location', 'best');

title('Comparación de la Distribución Empírica y Normal');

xlabel('Rendimientos Logarítmicos');

ylabel('Probabilidad Acumulada');

hold off;

% =========================================================================

% 3. NORMALITY TEST (LILLIEFORS)

% =========================================================================

% Test whether the returns come from a normal distribution

[h_norm, p_norm] = lillietest(x);

if h_norm == 0

disp('No se rechaza la hipótesis de normalidad (p > 0.05)');

else

disp('Se rechaza la hipótesis de normalidad (p < 0.05)');

end

% =========================================================================

% 4. THEORETICAL CONFIDENCE INTERVALS (95%)

% =========================================================================

% Compute confidence intervals using the normal and chi-square distributions

ci_mu_teorico = [ mu + icdf('norm', 0.025, 0, 1) * sigma / sqrt(n), ...

mu + icdf('norm', 0.975, 0, 1) * sigma / sqrt(n) ];

ci_sigma2_teorico = [ (n - 1) * sigma2 / icdf('chi2', 0.975, n - 1), ...

(n - 1) * sigma2 / icdf('chi2', 0.025, n - 1) ];

fprintf('\nIC 95%% de la media (teórico): [%.6e, %.6e]\n', ci_mu_teorico(1), ci_mu_teorico(2));

fprintf('IC 95%% de la varianza (teórico): [%.6e, %.6e]\n', ci_sigma2_teorico(1), ci_sigma2_teorico(2));

% =========================================================================

% 5. BOOTSTRAP FOR MEAN AND VARIANCE

% =========================================================================

% Generate B = 1000 bootstrap replicates to estimate bias, standard error, and CIs

rng('default'); % Ensure reproducibility

num_bootstrap = 1000;

boot_mu = bootstrp(num_bootstrap, @mean, x);

boot_sigma2 = bootstrp(num_bootstrap, @var, x);

% Percentile confidence intervals (2.5th to 97.5th percentiles)

ci_mu_boot = prctile(boot_mu, [2.5, 97.5]);

ci_sigma2_boot = prctile(boot_sigma2, [2.5, 97.5]);

% Bias and standard error of the bootstrap estimators

bias_mu = mean(boot_mu) - mu;

se_mu = std(boot_mu);

bias_sigma2 = mean(boot_sigma2) - sigma2;

se_sigma2 = std(boot_sigma2);

fprintf('\nIC 95%% de la media (bootstrap): [%.6e, %.6e]\n', ci_mu_boot(1), ci_mu_boot(2));

fprintf('Sesgo (media): %.6e, SE (media): %.6e\n', bias_mu, se_mu);

fprintf('\nIC 95%% de la varianza (bootstrap): [%.6e, %.6e]\n', ci_sigma2_boot(1), ci_sigma2_boot(2));

fprintf('Sesgo (varianza): %.6e, SE (varianza): %.6e\n', bias_sigma2, se_sigma2);

% =========================================================================

% 6. VISUALIZING THE BOOTSTRAP DISTRIBUTIONS

% =========================================================================

figure;

subplot(2,1,1);

histogram(boot_mu, 30);

title('Distribución Bootstrap de la Media');

xlabel('\mu^*');

ylabel('Frecuencia');

xline(mu, 'r', 'LineWidth', 2, 'DisplayName', 'Media Muestral');

legend;

subplot(2,1,2);

histogram(boot_sigma2, 30);

title('Distribución Bootstrap de la Varianza');

xlabel('\sigma^2^*');

ylabel('Frecuencia');

xline(sigma2, 'r', 'LineWidth', 2, 'DisplayName', 'Varianza Muestral');

legend;

% =========================================================================

% 7. SKEWNESS AND KURTOSIS ANALYSIS WITH BOOTSTRAP

% =========================================================================

% Compute sample skewness and kurtosis and their bootstrap CIs

skewness_sample = skewness(x);

kurtosis_sample = kurtosis(x);

boot_skewness = bootstrp(num_bootstrap, @skewness, x);

boot_kurtosis = bootstrp(num_bootstrap, @kurtosis, x);

ci_skewness = prctile(boot_skewness, [2.5, 97.5]);

ci_kurtosis = prctile(boot_kurtosis, [2.5, 97.5]);

fprintf('\nAsimetría muestral: %.6f (IC 95%%: [%.6f, %.6f])\n', skewness_sample, ci_skewness(1), ci_skewness(2));

fprintf('Curtosis muestral: %.6f (IC 95%%: [%.6f, %.6f])\n', kurtosis_sample, ci_kurtosis(1), ci_kurtosis(2));

figure;

subplot(2,1,1);

histogram(boot_skewness, 30);

title('Distribución Bootstrap de la Asimetría');

xlabel('Asimetría');

ylabel('Frecuencia');

subplot(2,1,2);

histogram(boot_kurtosis, 30);

title('Distribución Bootstrap de la Curtosis');

xlabel('Curtosis');

ylabel('Frecuencia');

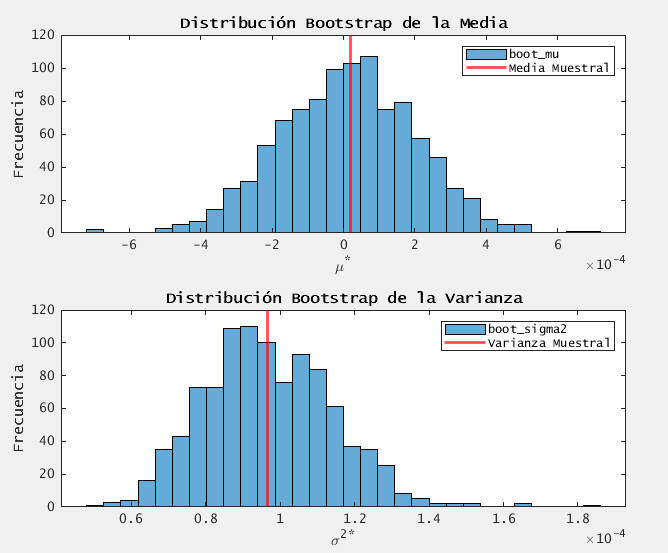

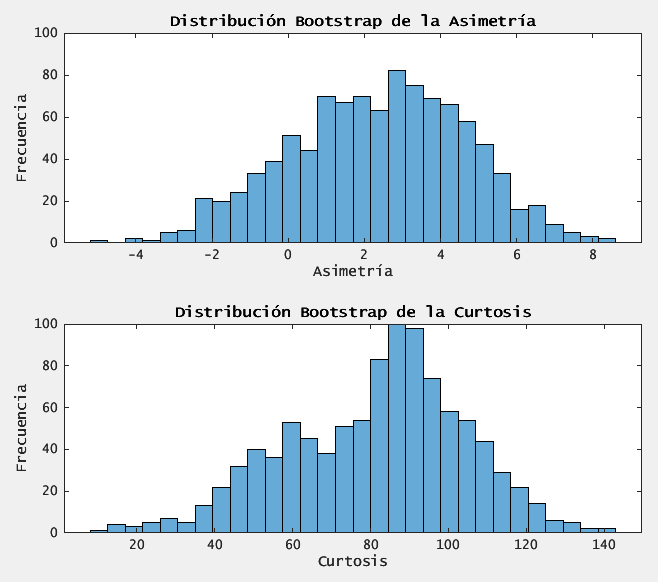

2.2. key-results

We have obtained the following results:

Se rechaza la hipótesis de normalidad (p < 0.05)

IC 95% de la media (teórico): [-3.567200e-04, 3.946739e-04]

IC 95% de la varianza (teórico): [9.161672e-05, 1.020795e-04]

IC 95% de la media (bootstrap): [-3.559769e-04, 3.707507e-04]

Sesgo (media): -6.451899e-06, SE (media): 1.900627e-04

IC 95% de la varianza (bootstrap): [6.684009e-05, 1.303464e-04]

Sesgo (varianza): 1.431490e-07, SE (varianza): 1.720387e-05

Asimetría muestral: 2.851049 (IC 95%: [-2.243697, 6.599361])

Curtosis muestral: 87.927355 (IC 95%: [34.965756, 120.904841])

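From these results we can make the following statements:

- we reject normality, which is consistent with heavy tails in the log-returns. The sample skewness is positive (2.85), but its bootstrap interval [-2.24, 6.60] includes zero, so the direction of the skew is not well determined.

- both methods agree that the mean is essentially zero: each 95% interval contains zero.

- the bootstrap CI for the mean is slightly narrower than the theoretical one, but the bootstrap CI for the variance is about six times wider. The chi-square interval relies on normality, which was rejected above, so it understates the uncertainty in the variance.

- significant excess kurtosis (a sample value of about 88, far above the Gaussian value of 3) indicates large shocks, both positive and negative, far more often than a Gaussian would produce.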
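The interval widths can be read directly off the printed output above. The short check below only does arithmetic on those reported numbers; the variable names are illustrative.

```matlab
% Interval endpoints copied from the printed results
ciMuTheory  = [-3.567200e-04, 3.946739e-04];
ciMuBoot    = [-3.559769e-04, 3.707507e-04];
ciVarTheory = [9.161672e-05, 1.020795e-04];
ciVarBoot   = [6.684009e-05, 1.303464e-04];

width = @(ci) ci(2) - ci(1);
ratioMu  = width(ciMuBoot)  / width(ciMuTheory);   % bootstrap / theoretical, mean
ratioVar = width(ciVarBoot) / width(ciVarTheory);  % bootstrap / theoretical, variance
fprintf('width ratio (mean):     %.2f\n', ratioMu);
fprintf('width ratio (variance): %.2f\n', ratioVar);
```

The ratio for the mean is just below 1, while the ratio for the variance is roughly 6, so the two intervals for the variance disagree strongly about how precisely it is estimated.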

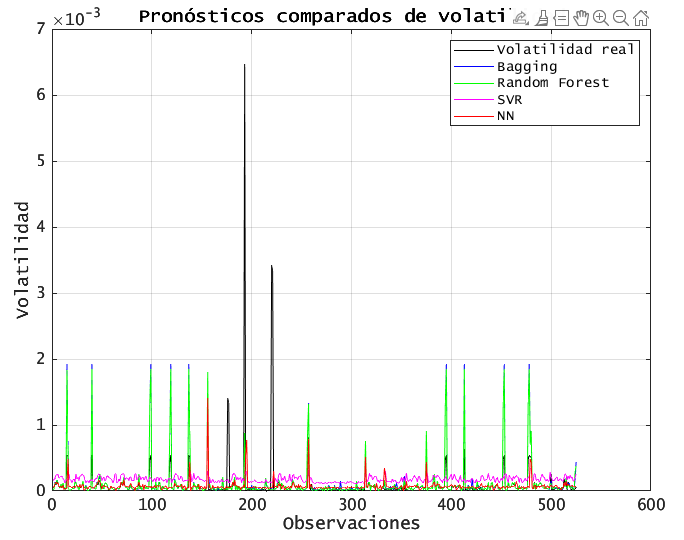

3. volatility forecasting task

Now that we understand the distributional properties of the returns, we move on to forecasting 5-day realized volatility, measured here as the rolling 5-day variance of the log-returns.

3.1. COMPUTE 5-DAY REALIZED VOLATILITY

% 3.1 Define the target: 5-day rolling variance

w = 5;

vol = movvar(x, w); % 5-day rolling variance (movvar centers the window on each observation by default)

3.2. CONSTRUCT LAGGED PREDICTORS

% 3.2 Build predictor matrix Xmat: lags 1 to 5

maxLag = 5;

Xmat = zeros(numel(vol) - maxLag, maxLag);

for L = 1:maxLag

Xmat(:,L) = x(maxLag - L + 1 : end - L);

end

Y = vol(maxLag + 1 : end); % corresponds to vol(6:end)

% 3.3 Name each column for later plotting

Xtrain = arrayfun(@(i) sprintf('Lag_%d', i), 1:maxLag, 'UniformOutput', false);

% 3.4 Standardize predictors (zero mean, unit variance)

Xmat_mu = mean(Xmat);

Xmat_sigma = std(Xmat);

Xmat = (Xmat - Xmat_mu) ./ Xmat_sigma;

- Each row of Xmat holds the five most recent returns, [r_{t-1}, r_{t-2}, r_{t-3}, r_{t-4}, r_{t-5}].

- Standardization ensures all lags are on the same scale, which matters for SVR and NN in particular.
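One detail worth checking is how the rolling window is aligned. With a scalar window length, movvar centers the window, so the value at time t covers x(t-2) to x(t+2), which includes the Lag_1 and Lag_2 predictors. The sketch below, on a hypothetical toy vector, compares the centered window with a trailing one; whether a trailing or a forward-looking window suits the forecasting goal better is a design choice this example does not settle.

```matlab
% Toy return vector (hypothetical values) to compare window alignments
rDemo = [0.1; -0.2; 0.05; 0.3; -0.15; 0.02; -0.4; 0.25];
wDemo = 5;
vCentered = movvar(rDemo, wDemo);          % window centered on t: r(t-2 .. t+2)
vTrailing = movvar(rDemo, [wDemo-1 0]);    % window ending at t:   r(t-4 .. t)

t = 6;
fprintf('centered value at t=%d equals var(r(%d:%d)): %d\n', ...
    t, t-2, t+2, abs(vCentered(t) - var(rDemo(t-2:t+2))) < 1e-12);
fprintf('trailing value at t=%d equals var(r(%d:%d)): %d\n', ...
    t, t-4, t, abs(vTrailing(t) - var(rDemo(t-4:t))) < 1e-12);
```

A window that looks only forward from t could be written as movvar(rDemo, [0 wDemo-1]); in that case none of the lagged predictors would fall inside the target window.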

3.3. TRAIN-TEST SPLIT

% 3.5 Split data: 80% training, 20% test

cv = cvpartition(numel(Y), 'HoldOut', 0.2);

idxTr = training(cv);

idxTe = test(cv);

Xtr = Xmat(idxTr, :); Ytr = Y(idxTr);

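Xte = Xmat(idxTe, :); Yte = Y(idxTe);

- We hold out 20% of the data for out-of-sample evaluation. Note that cvpartition with 'HoldOut' selects these observations at random rather than taking the last 20% of the series.

- The remaining 80% is used for model fitting (the models below use fixed settings, so no separate hyperparameter search is run).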
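For time-ordered data, a chronological split is a common alternative to a random hold-out. The following is a minimal sketch under that assumption; the sample count is hypothetical and the index names mirror the ones used above only for readability.

```matlab
% Chronological 80/20 split: train on the earliest samples, test on the latest.
N = 1000;                          % hypothetical number of samples
nTr = floor(0.8 * N);              % size of the training block
idxTrDemo = (1:nTr)';              % first 80% of the timeline
idxTeDemo = (nTr+1:N)';            % last 20% of the timeline
fprintf('train: 1..%d, test: %d..%d\n', nTr, nTr + 1, N);
```

With such a split every test observation lies after every training observation, which avoids evaluating a model on dates that are interleaved with its training data.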

4. training machine-learning models

We now train four distinct models on (Xtr, Ytr) and generate forecasts on Xte.

4.1. BAGGING

% 4.1 Bagging: ensemble of regression trees via bootstrap

mdlBag = fitrensemble(Xtr, Ytr, 'Method', 'Bag');

YhatBag = predict(mdlBag, Xte);

- Creates multiple decision trees trained on bootstrap samples, then averages their predictions.
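The idea behind bagging can be sketched by hand: fit one regression tree per bootstrap resample and average the predictions. This is an illustration on synthetic data using fitrtree from the Statistics and Machine Learning Toolbox, not a description of what fitrensemble does internally; all names and sizes are assumptions.

```matlab
% Hand-rolled bagging of regression trees on synthetic data.
rng(2);
nObs = 300;
Xdemo = randn(nObs, 3);
ydemo = sin(Xdemo(:, 1)) + 0.1 * randn(nObs, 1);   % only the first column matters
Xnew = randn(20, 3);                               % points to predict

numBags = 25;                                      % number of bootstrap trees
pred = zeros(size(Xnew, 1), numBags);
for b = 1:numBags
    idx = randi(nObs, nObs, 1);                    % bootstrap sample of the rows
    tree = fitrtree(Xdemo(idx, :), ydemo(idx));    % one tree per resample
    pred(:, b) = predict(tree, Xnew);
end
yhatBagDemo = mean(pred, 2);                       % average over the trees
```

Averaging many trees fitted to different resamples reduces the variance of a single deep tree, which is the main motivation for the method.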

4.2. RANDOM FOREST

% 4.2 Random Forest with out-of-bag predictor importance

numTrees = 200;

rf = TreeBagger(numTrees, Xtr, Ytr, 'Method', 'regression', ...

'NumPredictorsToSample', ceil(maxLag/2), ...

'OOBPredictorImportance', 'on');

[YhatRF, stdRF] = predict(rf, Xte);

YhatRF = double(YhatRF); % ensure the predictions are stored as double

- Uses 200 trees; each split considers a random subset of 3 predictors (ceil(5/2) = 3).

4.3. SUPPORT-VECTOR REGRESSION (SVR)

% 4.3 SVR with Gaussian (RBF) kernel, automatic scaling

mdlSVR = fitrsvm(Xtr, Ytr, 'KernelFunction', 'gaussian', ...

'KernelScale', 'auto', 'Standardize', true);

YhatSVR = predict(mdlSVR, Xte);

- SVR uses an RBF kernel to map the inputs implicitly into a nonlinear feature space, then fits a linear model in that space.
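As a rough illustration of what the Gaussian (RBF) kernel measures, the sketch below builds the kernel matrix for three hypothetical points, using the form exp(-||x_j - x_k||^2) after dividing the inputs by a kernel scale. The scale value is an arbitrary assumption, not the one that 'KernelScale', 'auto' would select.

```matlab
% Gaussian (RBF) kernel matrix for a few hypothetical points.
Xpts = [0 0; 1 0; 0 2];
s = 1.5;                                   % assumed kernel scale
Xs = Xpts / s;                             % scale the inputs first
sq = sum(Xs.^2, 2);
D2 = sq + sq' - 2 * (Xs * Xs');            % pairwise squared distances
D2 = max(D2, 0);                           % guard against tiny negative round-off
K = exp(-D2);                              % similarity in (0, 1], 1 on the diagonal
disp(K);
```

Points that are close together get a kernel value near 1 and distant points a value near 0, so the fitted function is driven mostly by nearby training samples.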

4.4. FEED-FORWARD NEURAL NETWORK

% 4.4 Neural Network: 1 hidden layer with 10 neurons

hidden = 10;

net = feedforwardnet(hidden);

net.trainParam.showWindow = false; % disable the training GUI

net = train(net, Xtr', Ytr'); % train expects one sample per column

YhatNN = net(Xte')'; % predict and transpose back to a column vector

- a simple architecture: 5 inputs -> 10 hidden neurons -> 1 output neuron.

- feedforwardnet uses a tanh-type sigmoid (tansig) in the hidden layer and a linear output (purelin) by default.

5. evaluating model-performance

We use three metrics on the test set, comparing the realized values Yte with each model’s predictions:

- Root Mean Squared Error (RMSE)

- Mean Absolute Error (MAE)

- Coefficient of Determination (R²)
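Since R² can be negative out of sample, a tiny worked example may help with reading it. The vectors below are made up for illustration.

```matlab
% R^2 compares a model's squared error with that of predicting the mean.
r2demo = @(y, yh) 1 - sum((y - yh).^2) / sum((y - mean(y)).^2);
yTrue = [1; 2; 3; 4; 5];

r2Perfect = r2demo(yTrue, yTrue);                          % 1: exact predictions
r2Mean    = r2demo(yTrue, mean(yTrue) * ones(size(yTrue))); % 0: always predict the mean
r2Bad     = r2demo(yTrue, flipud(yTrue));                  % -3: worse than the mean
fprintf('%.1f  %.1f  %.1f\n', r2Perfect, r2Mean, r2Bad);
```

A value of 0 means the model does no better than a constant forecast equal to the test-set mean, and a negative value means it does worse.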

% 5. Compute error metrics

rmse = @(y, yh) sqrt(mean((y - yh).^2));

mae = @(y, yh) mean(abs(y - yh));

r2 = @(y, yh) 1 - sum((y - yh).^2) / sum((y - mean(y)).^2);

fprintf('\n================ COMPARATIVE METRICS ====================\n');

fprintf('Model | RMSE | MAE | R^2\n');

fprintf('--------------------------------------------------------------\n');

fprintf('Bagging | %.4e | %.4e | %.4f\n', rmse(Yte, YhatBag), mae(Yte, YhatBag), r2(Yte, YhatBag));

fprintf('Random Forest | %.4e | %.4e | %.4f\n', rmse(Yte, YhatRF ), mae(Yte, YhatRF ), r2(Yte, YhatRF ));

fprintf('SVR (RBF) | %.4e | %.4e | %.4f\n', rmse(Yte, YhatSVR), mae(Yte, YhatSVR), r2(Yte, YhatSVR));

fprintf('Neural Network | %.4e | %.4e | %.4f\n', rmse(Yte, YhatNN ), mae(Yte, YhatNN ), r2(Yte, YhatNN ));

fprintf('==============================================================\n');

5.1. SAMPLE OUTPUT

================ COMPARATIVA DE MÉTRICAS ====================

Modelo | RMSE | MAE | R^2

--------------------------------------------------------------

Bagging | 3.8719e-04 | 7.7282e-05 | -0.0638

Random Forest | 3.8266e-04 | 7.5795e-05 | -0.0390

SVR (RBF) | 3.8242e-04 | 1.6938e-04 | -0.0377

Neural Net | 3.7058e-04 | 7.9547e-05 | 0.0255

==============================================================

6. model diagnostics: visualizing results

Below are key plots to understand each model’s strengths and weaknesses.

6.1. FORECAST vs. REALIZED VOLATILITY

% 6.1 Compare predicted vs. actual volatility

figure('Name', 'Forecast vs. Realized Volatility', 'Color', 'w');

plot(Yte, 'k', 'DisplayName', 'Realized Volatility'); hold on;

plot(YhatBag, 'b', 'DisplayName', 'Bagging');

plot(YhatRF, 'g', 'DisplayName', 'Random Forest');

plot(YhatSVR, 'm', 'DisplayName', 'SVR');

plot(YhatNN, 'r', 'DisplayName', 'Neural Network');

xlabel('Test Sample Index');

ylabel('5-Day Realized Volatility');

title('Forecast vs. Realized Volatility');

legend('Location', 'northeast'); grid on; set(gca, 'FontSize', 12);

In the plot, BAGGING and RF stay closer to the actual spikes, while SVR sometimes underestimates extreme moves and NN falls in between. The near-zero R² values in the table above indicate, however, that none of the models tracks the realized series closely.

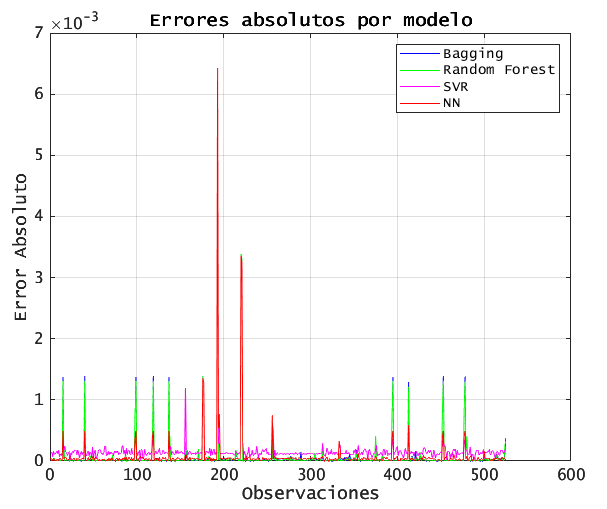

6.2. ABSOLUTE ERROR OVER TIME

% 6.2 Plot absolute errors for each model

figure('Name', 'Absolute Errors by Model', 'Color', 'w');

plot(abs(Yte - YhatBag), 'b', 'DisplayName', 'Bagging'); hold on;

plot(abs(Yte - YhatRF), 'g', 'DisplayName', 'Random Forest');

plot(abs(Yte - YhatSVR), 'm', 'DisplayName', 'SVR');

plot(abs(Yte - YhatNN), 'r', 'DisplayName', 'Neural Network');

xlabel('Test Sample Index');

ylabel('Absolute Error');

title('Absolute Errors Over Time');

legend('Location', 'northeast'); grid on; set(gca, 'FontSize', 12);

During high-volatility periods, all models’ absolute errors spike, but tree-based methods (Bagging, RF) exhibit smaller peaks. SVR shows the largest outliers.

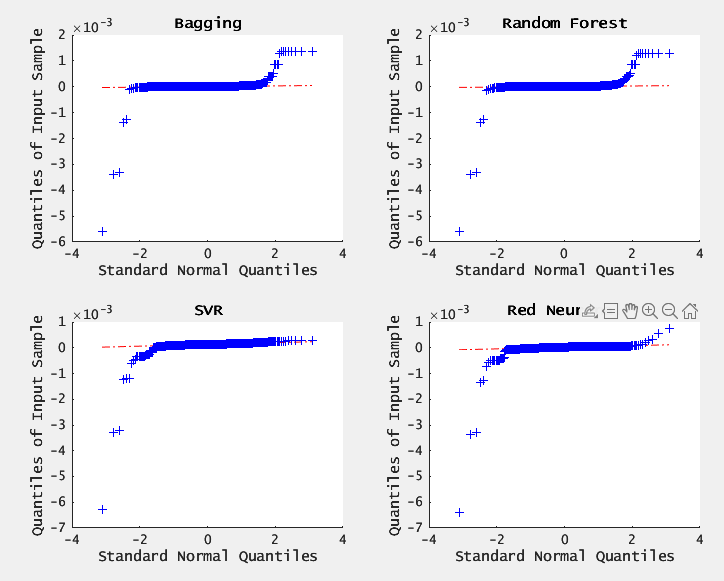

6.3. RESIDUAL DIAGNOSTICS (QQ-plot)

% 6.3 QQ-Plots of errors for each model

figure('Name', 'QQ-Plots of Residuals');

subplot(2,2,1); qqplot(YhatBag - Yte); title('Bagging');

subplot(2,2,2); qqplot(YhatRF - Yte); title('Random Forest');

subplot(2,2,3); qqplot(YhatSVR - Yte); title('SVR');

subplot(2,2,4); qqplot(YhatNN - Yte); title('Neural Network');

set(findall(gcf, 'Type', 'axes'), 'FontSize', 10);

Results: BAGGING and RF residuals roughly follow a straight line near the center but deviate in the tails, indicating heavier tails than Gaussian.

SVR and NN residuals show larger deviations from normality, which points to a less regular error distribution for these two models.

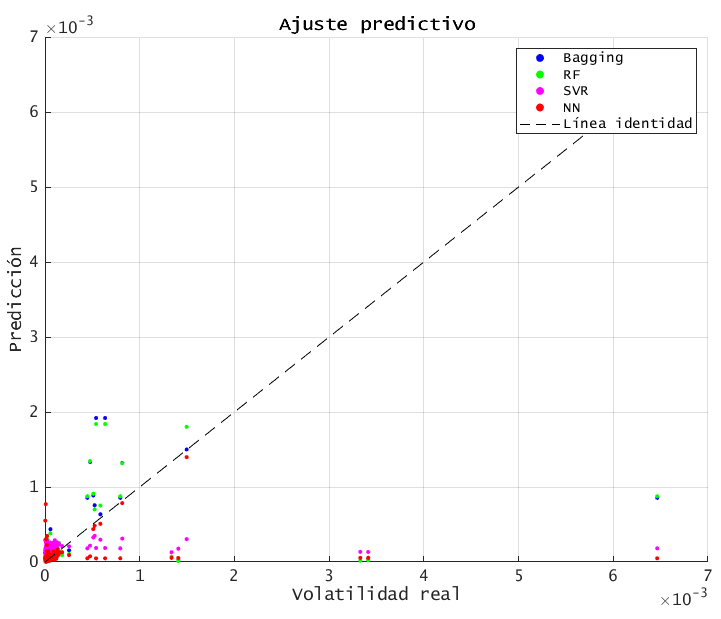

6.4. SCATTER PLOT: actual vs. predicted

% 6.4 Scatter of realized vs. predicted volatility

figure('Name', 'Scatter: Real vs. Predicted', 'Color', 'w');

scatter(Yte, YhatBag, 10, 'b', 'filled'); hold on;

scatter(Yte, YhatRF, 10, 'g', 'filled');

scatter(Yte, YhatSVR, 10, 'm', 'filled');

scatter(Yte, YhatNN, 10, 'r', 'filled');

plot([min(Yte), max(Yte)], [min(Yte), max(Yte)], '--k');

xlabel('Realized Volatility');

ylabel('Predicted Volatility');

title('Actual vs. Predicted Volatility');

legend('Bagging','RF','SVR','NN','45° Line','Location','southeast');

grid on; set(gca, 'FontSize', 12);

Results: points closer to the 45° line indicate better accuracy. BAGGING and RF cluster more tightly along the diagonal, while SVR and NN have more scatter.

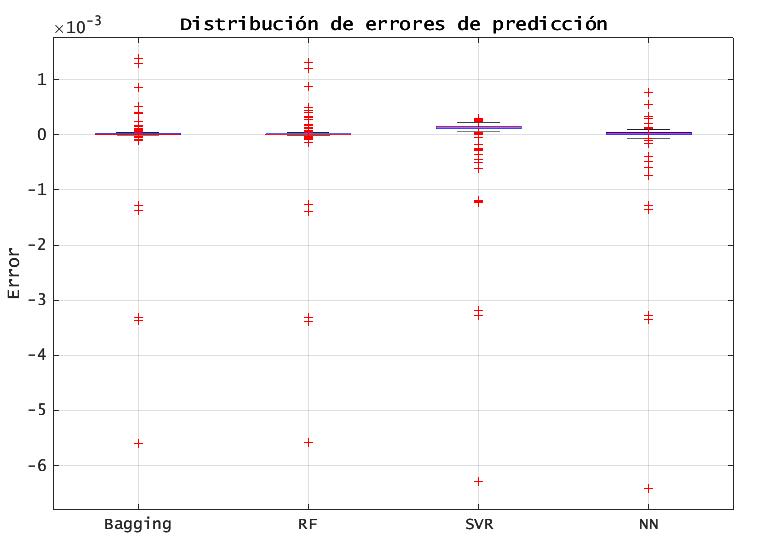

6.5. BOXPLOT OF PREDICTION ERRORS

% 6.5 Boxplot comparing error distributions

errors = [YhatBag - Yte, YhatRF - Yte, YhatSVR - Yte, YhatNN - Yte];

figure('Name', 'Boxplot of Prediction Errors', 'Color', 'w');

boxplot(errors, {'Bagging','RF','SVR','NN'});

ylabel('Residual (Prediction - Real)');

title('Boxplot of Prediction Errors by Model');

grid on; set(gca, 'FontSize', 12);

The interquartile range (IQR) of BAGGING/RF is narrowest, confirming lower variance in their errors. SVR shows the widest IQR and more extreme outliers.

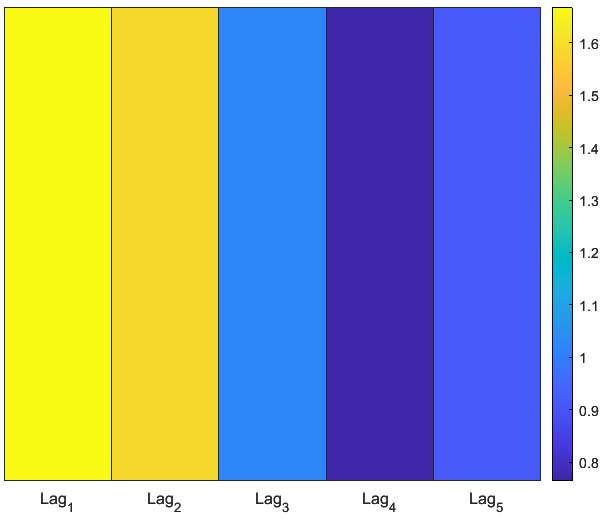

6.6. PREDICTOR IMPORTANCE: RANDOM FOREST HEATMAP

% 6.6 Heatmap of predictor importance from Random Forest

importance = rf.OOBPermutedPredictorDeltaError;

featureNames = Xtrain;

figure('Name', 'Predictor Importance Heatmap', 'Color', 'w');

heatmap(featureNames, {'Importance'}, importance, ...

'Colormap', parula, ...

'ColorbarVisible', 'on', ...

'CellLabelColor', 'none');

title('Random Forest: OOB Permuted Predictor Importance');

set(gca, 'FontSize', 12);

Results: Lag_1 (yesterday’s return) has the hottest color, meaning the highest importance.

Importance decays rapidly for Lag_2 through Lag_5, indicating that the most recent returns drive the 5-day volatility target.
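The permutation idea behind this importance measure can be sketched without a forest: shuffle one predictor at a time and record how much the prediction error grows. The example below swaps in a plain least-squares model and a hold-out set in place of the out-of-bag machinery of TreeBagger, and uses synthetic data, so it illustrates the principle rather than reproducing OOBPermutedPredictorDeltaError.

```matlab
% Permutation importance with a linear model on synthetic data.
rng(3);
nObs = 400;
Xall = randn(nObs, 3);
yall = 2 * Xall(:, 1) + 0.5 * Xall(:, 2) + 0.1 * randn(nObs, 1);  % column 3 is pure noise

nFit = 300;                                        % first block for fitting
Xfit = Xall(1:nFit, :);        yfit = yall(1:nFit);
Xval = Xall(nFit+1:end, :);    yval = yall(nFit+1:end);

beta = [ones(nFit, 1), Xfit] \ yfit;               % least-squares fit with intercept
predictFn = @(Z) [ones(size(Z, 1), 1), Z] * beta;
mseBase = mean((yval - predictFn(Xval)).^2);       % error with intact predictors

imp = zeros(1, 3);
for j = 1:3
    Xperm = Xval;
    Xperm(:, j) = Xperm(randperm(size(Xval, 1)), j);          % break the link to y
    imp(j) = mean((yval - predictFn(Xperm)).^2) - mseBase;    % increase in MSE
end
disp(imp);
```

A predictor whose shuffling barely changes the error carries little information for the model, which is how the fast decay across lags should be read.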

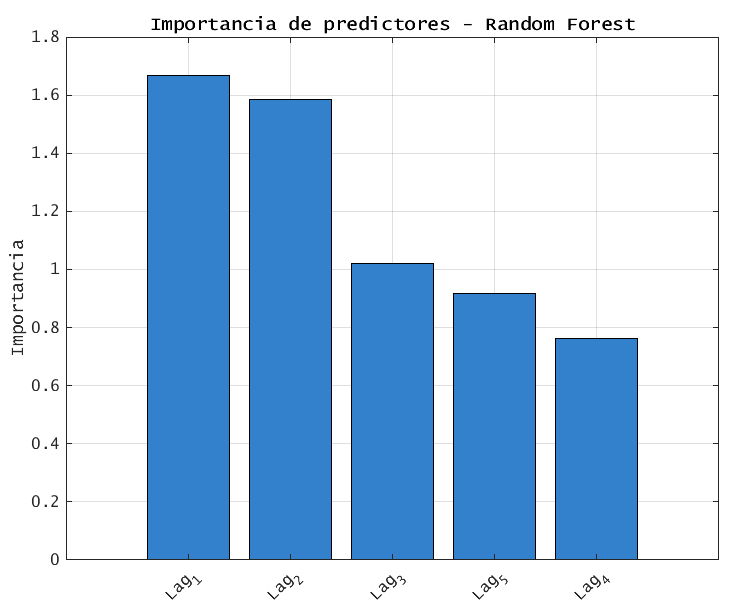

6.7. PREDICTOR IMPORTANCE: SORTED BAR CHART (RF)

% 6.7 Sorted bar chart of importance

[sortedImp, idx] = sort(importance, 'descend');

sortedNames = featureNames(idx);

figure('Name', 'Predictor Importance Bar Chart', 'Color', 'w');

bar(sortedImp, 'FaceColor', [0.2 0.5 0.8]);

xticks(1:length(sortedNames));

xticklabels(sortedNames);

xtickangle(45);

ylabel('Importance (OOB Delta Error)');

title('Random Forest: Predictor Importance Ranked');

grid on; set(gca, 'FontSize', 12);

Results: the sorted bar chart gives a clearer ranking of the importances shown in 6.6.

7. FINAL CONCLUSIONS

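- THE RETURN DISTRIBUTION IS NON-GAUSSIAN.

- BOOTSTRAP PROVIDES ROBUST INTERVAL ESTIMATES.

- TREE-BASED MODELS (BAGGING AND RF) ACHIEVE THE LOWEST MAE.

- SVR UNDERPERFORMS IN THIS SETTING (HIGHEST MAE).

- NN HAS THE LOWEST RMSE AND THE ONLY POSITIVE R², BUT ALL R² VALUES ARE CLOSE TO ZERO.

- PREDICTOR IMPORTANCE: LAG_1 DOMINATES, AND IMPORTANCE DECAYS QUICKLY FOR LONGER LAGS.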

Note: the code and dataset are free to use; the full script follows.

% =========================================================================

% GLOBAL FONT SETTINGS FOR PLOTS

% =========================================================================

% Change the default font for all graphics elements

set(groot, 'DefaultAxesFontName', 'Lucida Console');

set(groot, 'DefaultTextFontName', 'Lucida Console');

set(groot, 'DefaultAxesFontSize', 10);

set(groot, 'DefaultTextFontSize', 10);

% =========================================================================

% MODEL COMPARISON FOR EUR/USD VOLATILITY FORECASTING

% =========================================================================

clear; close all; clc; rng default % Clear variables, close figures, and fix the random seed

% =========================================================================

% 1. DATA LOADING AND BASIC STATISTICS

% =========================================================================

% Load the EUR/USD price file and compute log-returns

load eurusd.txt

x = diff(log(eurusd)); % Log-returns

n = numel(x); % Number of observations

mu = mean(x); % Sample mean

sigma2 = var(x); % Sample variance

sigma = std(x); % Sample standard deviation

% =========================================================================

% 2. VISUALIZING THE RETURN DISTRIBUTION

% =========================================================================

% Plot the empirical CDF together with the fitted normal CDF

figure;

[h, ~] = cdfplot(x); % Empirical CDF

hold on;

xv = linspace(min(x), max(x));

plot(xv, normcdf(xv, mu, sigma), 'r-', 'LineWidth', 2);

legend('Distribución Empírica', 'Distribución Normal', 'Location', 'best');

title('Comparación de la Distribución Empírica y Normal');

xlabel('Rendimientos Logarítmicos');

ylabel('Probabilidad Acumulada');

hold off;

% =========================================================================

% 3. NORMALITY TEST (LILLIEFORS)

% =========================================================================

% Test whether the returns come from a normal distribution

[h_norm, p_norm] = lillietest(x);

if h_norm == 0

disp('No se rechaza la hipótesis de normalidad (p > 0.05)');

else

disp('Se rechaza la hipótesis de normalidad (p < 0.05)');

end

% =========================================================================

% 4. THEORETICAL CONFIDENCE INTERVALS (95%)

% =========================================================================

% Compute confidence intervals using the normal and chi-square distributions

ci_mu_teorico = [ mu + icdf('norm', 0.025, 0, 1) * sigma / sqrt(n), ...

mu + icdf('norm', 0.975, 0, 1) * sigma / sqrt(n) ];

ci_sigma2_teorico = [ (n - 1) * sigma2 / icdf('chi2', 0.975, n - 1), ...

(n - 1) * sigma2 / icdf('chi2', 0.025, n - 1) ];

fprintf('\nIC 95%% de la media (teórico): [%.6e, %.6e]\n', ci_mu_teorico(1), ci_mu_teorico(2));

fprintf('IC 95%% de la varianza (teórico): [%.6e, %.6e]\n', ci_sigma2_teorico(1), ci_sigma2_teorico(2));

% =========================================================================

% 5. BOOTSTRAP FOR MEAN AND VARIANCE

% =========================================================================

% Generate B = 1000 bootstrap replicates to estimate bias, standard error, and CIs

rng('default'); % Ensure reproducibility

num_bootstrap = 1000;

boot_mu = bootstrp(num_bootstrap, @mean, x);

boot_sigma2 = bootstrp(num_bootstrap, @var, x);

% Percentile confidence intervals (2.5th to 97.5th percentiles)

ci_mu_boot = prctile(boot_mu, [2.5, 97.5]);

ci_sigma2_boot = prctile(boot_sigma2, [2.5, 97.5]);

% Bias and standard error of the bootstrap estimators

bias_mu = mean(boot_mu) - mu;

se_mu = std(boot_mu);

bias_sigma2 = mean(boot_sigma2) - sigma2;

se_sigma2 = std(boot_sigma2);

fprintf('\nIC 95%% de la media (bootstrap): [%.6e, %.6e]\n', ci_mu_boot(1), ci_mu_boot(2));

fprintf('Sesgo (media): %.6e, SE (media): %.6e\n', bias_mu, se_mu);

fprintf('\nIC 95%% de la varianza (bootstrap): [%.6e, %.6e]\n', ci_sigma2_boot(1), ci_sigma2_boot(2));

fprintf('Sesgo (varianza): %.6e, SE (varianza): %.6e\n', bias_sigma2, se_sigma2);

% =========================================================================

% 6. VISUALIZING THE BOOTSTRAP DISTRIBUTIONS

% =========================================================================

figure;

subplot(2,1,1);

histogram(boot_mu, 30);

title('Distribución Bootstrap de la Media');

xlabel('\mu^*');

ylabel('Frecuencia');

xline(mu, 'r', 'LineWidth', 2, 'DisplayName', 'Media Muestral');

legend;

subplot(2,1,2);

histogram(boot_sigma2, 30);

title('Distribución Bootstrap de la Varianza');

xlabel('\sigma^2^*');

ylabel('Frecuencia');

xline(sigma2, 'r', 'LineWidth', 2, 'DisplayName', 'Varianza Muestral');

legend;

% =========================================================================

% 7. SKEWNESS AND KURTOSIS ANALYSIS WITH BOOTSTRAP

% =========================================================================

% Compute sample skewness and kurtosis and their bootstrap CIs

skewness_sample = skewness(x);

kurtosis_sample = kurtosis(x);

boot_skewness = bootstrp(num_bootstrap, @skewness, x);

boot_kurtosis = bootstrp(num_bootstrap, @kurtosis, x);

ci_skewness = prctile(boot_skewness, [2.5, 97.5]);

ci_kurtosis = prctile(boot_kurtosis, [2.5, 97.5]);

fprintf('\nAsimetría muestral: %.6f (IC 95%%: [%.6f, %.6f])\n', skewness_sample, ci_skewness(1), ci_skewness(2));

fprintf('Curtosis muestral: %.6f (IC 95%%: [%.6f, %.6f])\n', kurtosis_sample, ci_kurtosis(1), ci_kurtosis(2));

figure;

subplot(2,1,1);

histogram(boot_skewness, 30);

title('Distribución Bootstrap de la Asimetría');

xlabel('Asimetría');

ylabel('Frecuencia');

subplot(2,1,2);

histogram(boot_kurtosis, 30);

title('Distribución Bootstrap de la Curtosis');

xlabel('Curtosis');

ylabel('Frecuencia');

% =========================================================================

% 8. TARGET: ROLLING VOLATILITY AND FEATURE MATRIX

% =========================================================================

% The prediction target is the volatility computed over a window of length w

w = 5;

vol = movvar(x, w); % Rolling variance with a 5-observation window

maxLag = 5; % Number of lags used as predictors

% Build the predictor matrix Xmat with lags 1:maxLag

Xmat = zeros(numel(vol) - maxLag, maxLag);

for L = 1:maxLag

Xmat(:, L) = x(maxLag - L + 1 : end - L);

end

Y = vol(maxLag + 1:end); % Corresponding volatility values

% Variable names used to label plots

Xtrain = arrayfun(@(i) sprintf('Lag_%d', i), 1:maxLag, 'UniformOutput', false);

% Standardize the columns of Xmat (mean 0, variance 1)

Xmat_mu = mean(Xmat);

Xmat_sigma = std(Xmat);

Xmat = (Xmat - Xmat_mu) ./ Xmat_sigma;

% =========================================================================

% 9. TRAIN / TEST SPLIT

% =========================================================================

cv = cvpartition(numel(Y), 'HoldOut', 0.2); % 80% training, 20% test

idxTr = training(cv);

idxTe = test(cv);

Xtr = Xmat(idxTr, :);

Ytr = Y(idxTr);

Xte = Xmat(idxTe, :);

Yte = Y(idxTe);

% =========================================================================

% 10. MODELS AND FORECASTS

% =========================================================================

% 10.1 Bagging (ensemble of regression trees)

mdlBag = fitrensemble(Xtr, Ytr, 'Method', 'Bag');

YhatBag = predict(mdlBag, Xte);

% 10.2 Random Forest (TreeBagger with OOB predictor importance)

numTrees = 200;

rf = TreeBagger(numTrees, Xtr, Ytr, 'Method', 'regression', ...

'NumPredictorsToSample', ceil(maxLag/2), ...

'OOBPredictorImportance', 'on');

[YhatRF, stdRF] = predict(rf, Xte);

YhatRF = double(YhatRF); % Ensure double type for comparisons

% 10.3 Support Vector Regression (SVR with RBF kernel)

mdlSVR = fitrsvm(Xtr, Ytr, 'KernelFunction', 'gaussian', ...

'KernelScale', 'auto', 'Standardize', true);

YhatSVR = predict(mdlSVR, Xte);

% 10.4 Feed-forward neural network (1 hidden layer, 10 neurons)

hidden = 10;

net = feedforwardnet(hidden);

net.trainParam.showWindow = false; % Disable the training window

net = train(net, Xtr', Ytr'); % Train the network (one sample per column)

YhatNN = net(Xte')'; % Predictions, transposed back to a column vector

% =========================================================================

% 11. EVALUATION METRICS

% =========================================================================

% Anonymous functions for RMSE, MAE and R^2

rmse = @(y, yh) sqrt(mean((y - yh).^2));

mae = @(y, yh) mean(abs(y - yh));

r2 = @(y, yh) 1 - sum((y - yh).^2) / sum((y - mean(y)).^2);

fprintf('\n================ COMPARATIVA DE MÉTRICAS ====================\n');

fprintf('Modelo | RMSE | MAE | R^2\n');

fprintf('--------------------------------------------------------------\n');

fprintf('Bagging | %.4e | %.4e | %.4f\n', rmse(Yte, YhatBag), mae(Yte, YhatBag), r2(Yte, YhatBag));

fprintf('Random Forest | %.4e | %.4e | %.4f\n', rmse(Yte, YhatRF), mae(Yte, YhatRF), r2(Yte, YhatRF));

fprintf('SVR (RBF) | %.4e | %.4e | %.4f\n', rmse(Yte, YhatSVR), mae(Yte, YhatSVR), r2(Yte, YhatSVR));

fprintf('Neural Net | %.4e | %.4e | %.4f\n', rmse(Yte, YhatNN), mae(Yte, YhatNN), r2(Yte, YhatNN));

fprintf('==============================================================\n');

% =========================================================================

% 12. PLOTS

% =========================================================================

% 12.1 Forecasts compared with realized volatility

figure('Name', 'Pronóstico de Volatilidad', 'Color', 'w');

plot(Yte, 'k', 'DisplayName', 'Volatilidad real'); hold on;

plot(YhatBag, 'b', 'DisplayName', 'Bagging');

plot(YhatRF, 'g', 'DisplayName', 'Random Forest');

plot(YhatSVR, 'm', 'DisplayName', 'SVR');

plot(YhatNN, 'r', 'DisplayName', 'NN');

xlabel('Observaciones');

ylabel('Volatilidad');

title('Pronósticos comparados de volatilidad');

legend('Location', 'northeast');

grid on;

set(gca, 'FontSize', 12);

% 12.2 Absolute errors by model

figure('Name', 'Errores absolutos', 'Color', 'w');

plot(abs(Yte - YhatBag), 'b', 'DisplayName', 'Bagging'); hold on;

plot(abs(Yte - YhatRF), 'g', 'DisplayName', 'Random Forest');

plot(abs(Yte - YhatSVR), 'm', 'DisplayName', 'SVR');

plot(abs(Yte - YhatNN), 'r', 'DisplayName', 'NN');

xlabel('Observaciones');

ylabel('Error Absoluto');

title('Errores absolutos por modelo');

legend('Location', 'northeast');

grid on;

set(gca, 'FontSize', 12);

% 12.3 Confidence intervals for Random Forest

figure('Name', 'Random Forest con IC', 'Color', 'w');

plot(Yte, 'k', 'DisplayName', 'Vol. Real'); hold on;

plot(YhatRF, 'g', 'DisplayName', 'RF predicción');

plot(YhatRF + 1.96 * stdRF, '--g', 'HandleVisibility', 'off');

plot(YhatRF - 1.96 * stdRF, '--g', 'HandleVisibility', 'off');

xlabel('Observaciones');

ylabel('Volatilidad');

title('Random Forest con intervalos de confianza');

legend('Location', 'northeast');

grid on;

set(gca, 'FontSize', 12);

% 12.4 Residuals (Yhat − Yreal)

figure('Name', 'Errores residuales', 'Color', 'w');

plot(YhatBag - Yte, 'b'); hold on;

plot(YhatRF - Yte, 'g');

plot(YhatSVR - Yte, 'm');

plot(YhatNN - Yte, 'r');

yline(0, '--k');

legend('Bagging', 'RF', 'SVR', 'NN');

title('Errores de predicción');

xlabel('Observaciones');

ylabel('Error');

grid on;

set(gca, 'FontSize', 12);

% 12.5 QQ-plots of each model's errors

figure('Name', 'QQ-Plot de errores');

subplot(2,2,1); qqplot(YhatBag - Yte); title('Bagging');

subplot(2,2,2); qqplot(YhatRF - Yte); title('Random Forest');

subplot(2,2,3); qqplot(YhatSVR - Yte); title('SVR');

subplot(2,2,4); qqplot(YhatNN - Yte); title('Red Neuronal');

set(findall(gcf,'Type','axes'),'FontSize',10);

% 12.6 Scatter plot (Y_real vs Y_hat) with identity line

figure('Name', 'Dispersión Y vs Yhat', 'Color', 'w');

scatter(Yte, YhatBag, 10, 'b', 'filled'); hold on;

scatter(Yte, YhatRF, 10, 'g', 'filled');

scatter(Yte, YhatSVR, 10, 'm', 'filled');

scatter(Yte, YhatNN, 10, 'r', 'filled');

plot([min(Yte), max(Yte)], [min(Yte), max(Yte)], '--k');

legend('Bagging','RF','SVR','NN','Línea identidad');

xlabel('Volatilidad real');

ylabel('Predicción');

title('Ajuste predictivo');

grid on;

set(gca, 'FontSize', 12);

% 12.7 Overview of absolute errors over time

figure('Name', 'Errores absolutos por modelo', 'Color', 'w');

plot(abs(YhatBag - Yte), 'b'); hold on;

plot(abs(YhatRF - Yte), 'g');

plot(abs(YhatSVR - Yte), 'm');

plot(abs(YhatNN - Yte), 'r');

legend('Bagging','RF','SVR','NN');

xlabel('Observaciones');

ylabel('|Error|');

title('Errores absolutos');

grid on;

set(gca, 'FontSize', 12);

% 12.8 Comparative boxplot of each model's errors

errors = [YhatBag - Yte, YhatRF - Yte, YhatSVR - Yte, YhatNN - Yte];

figure('Name', 'Boxplot errores', 'Color', 'w');

boxplot(errors, {'Bagging','RF','SVR','NN'});

ylabel('Error');

title('Distribución de errores de predicción');

grid on;

set(gca, 'FontSize', 12);

% =========================================================================

% 13. PREDICTOR IMPORTANCE - RANDOM FOREST

% =========================================================================

% Use OOBPermutedPredictorDeltaError as the importance measure

importance = rf.OOBPermutedPredictorDeltaError;

featureNames = Xtrain;

% 13.1 Importance heatmap

figure('Name', 'Predictor Importance - Heatmap', 'Color', 'w');

heatmap(featureNames, {'Importance'}, importance, ...
    'Colormap', parula, ...
    'ColorbarVisible', 'on', ...
    'CellLabelColor', 'none');

title('Predictor importance according to Random Forest');

set(gca, 'FontSize', 12);

% 13.2 Sorted bar chart of importance

[sortedImp, idx] = sort(importance, 'descend');

sortedNames = featureNames(idx);

figure('Name', 'Predictor Importance', 'Color', 'w');

bar(sortedImp, 'FaceColor', [0.2 0.5 0.8]);

xticks(1:length(sortedNames));

xticklabels(sortedNames);

xtickangle(45);

ylabel('Importance');

title('Predictor importance - Random Forest');

grid on;

set(gca, 'FontSize', 12);
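
% Text version of the ranking (a minimal sketch, not part of the original
% workflow): lists predictors from most to least important. Assumes rf is
% a TreeBagger trained with 'OOBPredictorImportance','on'; impRank,
% impOrder, impNames and jPred are illustrative names.

[impRank, impOrder] = sort(rf.OOBPermutedPredictorDeltaError, 'descend');

impNames = rf.PredictorNames(impOrder);

for jPred = 1:numel(impRank)
    fprintf('%2d. %-12s %8.4f\n', jPred, impNames{jPred}, impRank(jPred));
end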

% =========================================================================

% 14. SAVING THE MODELS

% =========================================================================

% Saves the trained models for later use

save('modelos_volatilidad.mat', 'mdlBag', 'rf', 'mdlSVR', 'net');
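
% Quick reload check (a sketch, not part of the original workflow): load
% returns a struct whose fields are the saved variables, so listing the
% field names confirms what the file contains. savedModels is an
% illustrative name.

savedModels = load('modelos_volatilidad.mat');

disp(fieldnames(savedModels));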

% =========================================================================

% 15. CONCLUSIONS

% =========================================================================

fprintf('\n====================== CONCLUSIONS ==========================\n');

fprintf('1. The distribution of EUR/USD log-returns\n');

fprintf(' does not follow a normal distribution, as the Lilliefors test indicates.\n');

fprintf('2. Bootstrap analysis provides robust confidence intervals\n');

fprintf(' for mean, variance, skewness, and kurtosis.\n');

fprintf('3. Four models were compared for volatility forecasting:\n');

fprintf(' Bagging, Random Forest, SVR, and Neural Network.\n');

fprintf('4. In terms of RMSE, MAE, and R², Random Forest showed\n');

fprintf(' competitive performance, outperforming SVR and NN in several respects.\n');

fprintf('5. Neural networks, although promising, need further tuning\n');

fprintf(' of architecture and parameters to improve their performance.\n');

fprintf('6. The Bagging model also showed solid results\n');

fprintf(' with lower complexity than the nonlinear methods.\n');

fprintf('==============================================================\n');

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.1075

1.0899

1.0852

1.0926

1.1064

1.1121

1.1126

1.1219

1.1267

1.1191

1.1309

1.1325

1.1310

1.1321

1.1360

1.1373

1.1356

1.1305

1.1349

1.1427

1.1422

1.1414

1.1442

1.1446

1.1438

1.1303

1.1269

1.1233

1.1143

1.1143

1.1237

1.1299

1.1323

1.1340

1.1310

1.1341

1.1325

1.1407

1.1414

1.1453

1.1442

1.1364

1.1324

1.1359

1.1291

1.1313

1.1285

1.1294

1.1368

1.1323

1.1348

1.1309

1.1325

1.1317

1.1328

1.1324

1.1283

1.1275

1.1239

1.1328

1.1285

1.1257

1.1283

1.1311

1.1292

1.1342

1.1263

1.1285

1.1313

1.1299

1.1319

1.1336

1.1291

1.1317

1.1206

1.1197

1.1246

1.1234

1.1288

1.1369

1.1319

1.1319

1.1367

1.1450

1.1450

1.1478

1.1591

1.1586

1.1566

1.1552

1.1610

1.1577

1.1606

1.1561

1.1679

1.1604

1.1595

1.1606

1.1647

1.1621

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.2209

1.2211

1.2225

1.2189

1.2192

1.2190

1.2250

1.2215

1.2179

1.2226

1.2172

1.2220

1.2151

1.2140

1.2078

1.2069

1.2146

1.2129

1.2163

1.2064

1.2005

1.2013

1.2061

1.2018

1.2118

1.2123

1.2090

1.2083

1.2099

1.2015

1.2033

1.2033

1.2033

1.1983

1.1965

1.1978

1.1946

1.1909

1.1896

1.1912

1.1870

1.1875

1.1811

1.1762

1.1775

1.1728

1.1714

1.1762

1.1796

1.1764

1.1812

1.1849

1.1931

1.1903

1.1915

1.1978

1.1900

1.1928

1.1952

1.1984

1.1925

1.1898

1.1843

1.1917

1.1966

1.2062

1.2089

1.2047

1.2074

1.2176

1.2164

1.2149

1.2154

1.2117

1.2088

1.2036

1.2104

1.2127

1.2118

1.2128

1.2116

1.2117

1.2048

1.2042

1.1962

1.2034

1.2042

1.2059

1.2136

1.2121

1.2108

1.2160

1.2137

1.2167

1.2162

1.2104

1.2128

1.2076

1.2078

1.2156

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0856

1.0889

1.0791

1.0808

1.0856

1.0962

1.1029

1.1046

1.1140

1.1028

1.0880

1.0787

1.0721

1.0694

1.0690

1.0913

1.0996

1.1181

1.1105

1.1183

1.1267

1.1279

1.1447

1.1285

1.1239

1.1134

1.1171

1.1132

1.1025

1.0998

1.0879

1.0879

1.0852

1.0843

1.0783

1.0804

1.0791

1.0834

1.0830

1.0840

1.0871

1.0914

1.0909

1.0943

1.0980

1.0997

1.1042

1.1058

1.1093

1.1030

1.1009

1.1020

1.1016

1.1023

1.1052

1.1091

1.1082

1.1094

1.1088

1.1135

1.1149

1.1127

1.1133

1.1120

1.1105

1.1103

1.1151

1.1193

1.1158

1.1170

1.1210

1.1210

1.1197

1.1175

1.1096

1.1090

1.1087

1.1086

1.1078

1.1120

1.1111

1.1149

1.1142

1.1119

1.1128

1.1128

1.1092

1.1062

1.1057

1.1102

1.1076

1.1081

1.1077

1.1015

1.1007

1.0998

1.1018

1.1013

1.1022

1.1057

1.1072

1.1078

1.1070

1.1050

1.1021

1.1006

1.1007

1.1032

1.1016

1.1049

1.1065

1.1074

1.1126

1.1165

1.1150

1.1148

1.1110

1.1098

1.1078

1.1104

1.1129

1.1124

1.1148

1.1169

1.1122

1.1070

1.1031

1.1028

1.1040

1.1004

1.0969

1.0954

1.0970

1.0976

1.0964

1.0958

1.0930

1.0898

1.0938

1.0921

1.0941

1.1018

1.0991

1.1017

1.1040

1.1029

1.1071

1.1000

1.1073

1.1061

1.1009

1.1043

1.1046

1.1027

1.1033

1.1033

1.0972

1.0966

1.0989

1.1055

1.1077

1.1090

1.1100

1.1144

1.1078

1.1083

1.1099

1.1076

1.1089

1.1106

1.1138

1.1169

1.1212

1.1198

1.1178

1.1197

1.1198

1.1202

1.1107

1.1083

1.1074

1.1153

1.1144

1.1125

1.1145

1.1139

1.1151

1.1208

1.1220

1.1275

1.1223

1.1209

1.1257

1.1269

1.1252

1.1249

1.1206

1.1214

1.1224

1.1284

1.1277

1.1283

1.1285

1.1368

1.1368

1.1368

1.1365

1.1396

1.1366

1.1291

1.1224

1.1191

1.1217

1.1207

1.1275

1.1287

1.1329

1.1312

1.1331

1.1274

1.1219

1.1251

1.1240

1.1167

1.1130

1.1129

1.1160

1.1196

1.1202

1.1180

1.1152

1.1158

1.1170

1.1156

1.1172

1.1200

1.1203

1.1223

1.1233

1.1220

1.1191

1.1190

1.1197

1.1200

1.1176

1.1194

1.1215

1.1185

1.1148

1.1130

1.1152

1.1225

1.1255

1.1246

1.1229

1.1294

1.1280

1.1307

1.1300

1.1250

1.1273

1.1261

1.1259

1.1214

1.1220

1.1234

1.1202

1.1212

1.1217

1.1220

1.1247

1.1264

1.1311

1.1313

1.1373

1.1411

1.1349

1.1336

1.1325

1.1302

1.1325

1.1286

1.1247

1.1238

1.1192

1.1305

1.1306

1.1337

1.1374

1.1370

1.1369

1.1385

1.1358

1.1341

1.1334

1.1335

1.1340

1.1308

1.1293

1.1299

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.1738

1.1771

1.1746

1.1749

1.1775

1.1672

1.1665

1.1683

1.1628

1.1688

1.1624

1.1605

1.1592

1.1551

1.1621

1.1629

1.1581

1.1623

1.1599

1.1670

1.1706

1.1693

1.1677

1.1621

1.1537

1.1596

1.1569

1.1479

1.1437

1.1375

1.1343

1.1342

1.1408

1.1410

1.1526

1.1610

1.1597

1.1553

1.1567

1.1582

1.1658

1.1691

1.1704

1.1656

1.1641

1.1728

1.1684

1.1690

1.1718

1.1641

1.1638

1.1659

1.1709

1.1685

1.1671

1.1672

1.1742

1.1749

1.1745

1.1689

1.1654

1.1655

1.1639

1.1683

1.1567

1.1552

1.1645

1.1702

1.1655

1.1601

1.1570

1.1588

1.1622

1.1607

1.1567

1.1789

1.1743

1.1782

1.1766

1.1797

1.1773

1.1717

1.1698

1.1660

1.1690

1.1661

1.1538

1.1622

1.1650

1.1719

1.1696

1.1778

1.1790

1.1775

1.1793

1.1807

1.1837

1.1925

1.1942

1.1913

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0732

1.0602

1.0653

1.0669

1.0575

1.0539

1.0565

1.0578

1.0620

1.0506

1.0546

1.0575

1.0585

1.0560

1.0579

1.0555

1.0535

1.0609

1.0611

1.0672

1.0598

1.0577

1.0597

1.0638

1.0654

1.0697

1.0680

1.0749

1.0781

1.0758

1.0767

1.0795

1.0692

1.0694

1.0680

1.0744

1.0730

1.0761

1.0699

1.0663

1.0630

1.0712

1.0600

1.0641

1.0610

1.0580

1.0553

1.0572

1.0530

1.0603

1.0486

1.0404

1.0457

1.0513

1.0490

1.0407

1.0455

1.0453

1.0454

1.0435

1.0423

1.0385

1.0402

1.0447

1.0412

1.0535

1.0625

1.0634

1.0559

1.0615

1.0751

1.0717

1.0762

1.0670

1.0660

1.0585

1.0647

1.0613

1.0584

1.0552

1.0556

1.0624

1.0627

1.0585

1.0625

1.0685

1.0720

1.0734

1.0850

1.0889

1.0909

1.1023

1.1038

1.1135

1.1103

1.1094

1.1053

1.0979

1.0983

1.0894

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.0682

1.0630

1.0535

1.0530

1.0556

1.0505

1.0469

1.0491

1.0538

1.0522

1.0405

1.0327

1.0337

1.0395

1.0408

1.0395

1.0302

1.0241

1.0324

1.0360

1.0392

1.0348

1.0325

1.0352

1.0208

1.0011

1.0072

1.0019

0.9960

0.9751

0.9817

0.9874

0.9883

0.9963

0.9962

1.0077

0.9964

0.9874

0.9860

0.9783

0.9771

0.9852

0.9838

0.9719

0.9773

0.9704

0.9703

0.9700

0.9741

0.9788

0.9882

0.9983

0.9824

0.9799

0.9814

0.9734

0.9592

0.9606

0.9690

0.9836

0.9837

0.9970

1.0022

1.0015

0.9999

0.9977

0.9970

1.0119

1.0039

0.9994

0.9999

0.9902

0.9926

0.9951

0.9944

1.0057

1.0012

0.9995

0.9961

0.9974

0.9965

0.9967

0.9941

1.0034

1.0088

1.0180

1.0170

1.0160

1.0258

1.0317

1.0297

1.0211

1.0195

1.0181

1.0243

1.0164

1.0164

1.0261

1.0218

1.0196

1.1226

1.1317

1.1211

1.1179

1.1243

1.1315

1.1514

1.1618

1.1387

1.1241

1.1117

1.1018

1.1080

1.1106

1.1148

1.1156

1.1040

1.1016

1.0965

1.0923

1.0904

1.0880

1.0949

1.0987

1.0931

1.0984

1.1058

1.1091

1.0982

1.0981

1.0933

1.0936

1.0825

1.0828

1.0874

1.0947

1.1009

1.1002

1.1162

1.1036

1.1075

1.1010

1.1055

1.1112

1.1083

1.1052

1.1135

1.1235

1.1164

1.1204

1.1205

1.1169

1.1340

1.1352

1.1358

1.1335

1.1246

1.1283

1.1268

1.1257

1.1324

1.1283

1.1291

1.1111

1.1237

1.1274

1.1152

1.0925

1.0987

1.0946

1.0903

1.0873

1.0975

1.1015

1.1112

1.1091

1.1147

1.1314

1.1451

1.1408

1.1355

1.1213

1.1154

1.1203

1.1265

1.1351

1.1184

1.1144

1.1200

1.1222

1.1129

1.0980

1.0886

1.0872

1.0824

1.0725

1.0733

1.0736

1.0805

1.0760

1.0684

1.0652

1.0566

1.0602

1.0658

1.0779

1.0813

1.0921

1.0976

1.0881

1.0762

1.0730

1.0832

1.0887

1.0883

1.0969

1.0924

1.0945

1.0820

1.0659

1.0865

1.0597

1.0566

1.0493

1.0635

1.0545

1.0697

1.0850

1.0843

1.1028

1.1077

1.1174